Cleaning up Commons part 1: images with borders

Wikimedia Commons has thousands of images that need to be cleaned up. These are organized into categories at Images for cleanup. While most of these categories require manual fixing, some of them can be partly or entirely automated (and should be fun programming exercises).

The Images with borders category was the first to catch my attention. Just look at it:

How hard could it be, right? Not very, but to make things just a bit easier I decided to work on the Plymouth bus subset (n = 380) first, as these all have similar (but not identical) borders.

Grabbing the images

The first step is to fetch a list of all the images in the Image with borders category, then select and download the Plymouth subset. This script does just that:

mkdir -p images_unprocessed

cd images_unprocessed

basequery="https://commons.wikimedia.org/w/api.php?\

action=query&format=json&\

prop=imageinfo&\

generator=categorymembers&\

iiprop=url&\

gcmtitle=Category%3A+Images+with+borders&\

gcmtype=file&\

gcmlimit=500"

apiquery=$basequery

# Query API

while [ -z $done ]; do

response=$($(echo "curl --silent $apiquery"))

urls+=( $(echo $response | jq ".query.pages[].imageinfo[].url") )

if echo $response | grep -q "\"gcmcontinue\":"; then

gcmcont=$(echo $response | jq ".continue.gcmcontinue")

apiquery=$(echo $basequery\&gcmcontinue=$gcmcont)

else

done=1

fi

done

# Grab all the files (or in this case all the ones from the Plymouth set)

for url in "${urls[@]}"; do

if echo $url | grep -q Plymouth; then

wget $(echo $url | sed -e 's/^"//' -e 's/"$//')

fi

done

cd ..Notice that multiple API calls are made. This is due to the 500 results limit

(it’s 5000 for accounts with the bot privilege set). The API returns a parameter

called gcmcontinue. By adding this to our next API call, the next batch of 500

will be returned. This is repeated until the API runs out of items to return (in

which point the gcmcontinue parameter won’t be included in the API’s response

anymore, which is the trigger that makes the script exit the for loop).

jq is used to extract the image URLs from the API query and add them to an array.

Once we have our array with the image URLs from all of the API calls, they are downloaded one by one using wget.

Cropping an image

As recommended by the {{Remove border}} template, jpegtran is used to do the cropping because it allows for lossless manipulation of JPEG images. Given that we’re going to crop hundreds of images manually (sorry, no fancy machine learning here), the processing pipeline needs to be as efficient as possible. This is what the procedure looks like for this image:

-

For each corner of the image, cut out a 32 by 32 pixel patch, blow it up to 512 by 512 pixels, and save it to disk (once with horizontal lines, once with vertical lines):

-

For each side of the image, concatenate the corners on that side into one image:

-

Show the sides one by one and prompt the user to select the first line that falls outside the border region (or falls exactly on the break between border and image). The lines are numbered from border to image. For example, the response for the bottom side of our example image would be “2”:

-

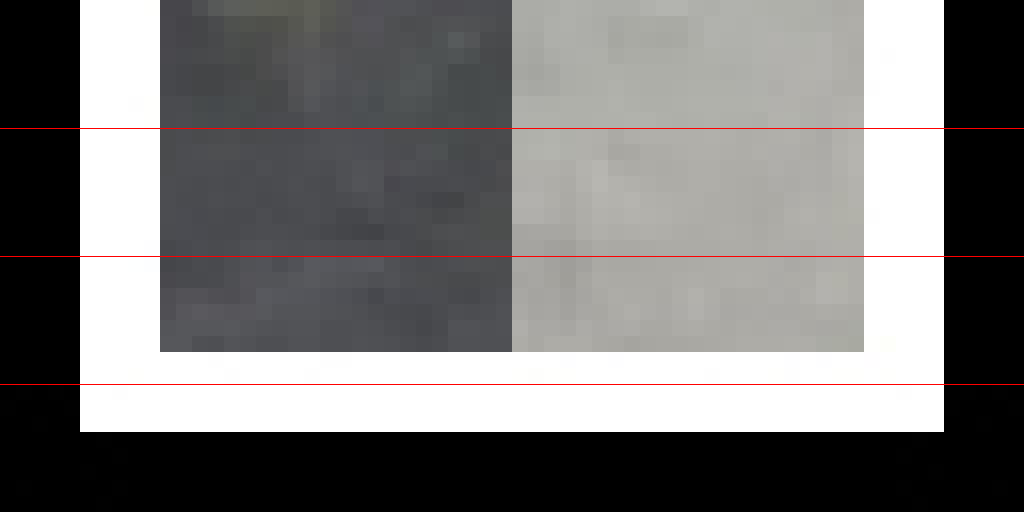

For the right and bottom sides, after the line has been selected, extract the strip that contains the break between border and image, blow it up, add lines for each row or column of pixels (depending on the orientation of the lines) and prompt the user to select the line that falls exactly on the break). For example, the response for the bottom strip would be “3”:

-

Crop image with the obtained parameters:

The reason step 4 is not performed on the top and left side of the image is that jpegtran can only cut at MCU boundaries for these sides. I suppose this is a limitation imposed by the JPEG standard itself.

Here’s a video of the whole procedure:

Of course, using feh to display the borders and strips, and capture user input

is a rather quick and dirty approach, but quite functional, which is what I was

aiming for after all.

The script that does all of the above:

# This function extracts a 32 by 32 pixel block from one of the image's corners,

# blows it up to 512 by 512 pixels, and draws some lines based on which we can

# tell in which section the break between the border and the image is located.

function corner {

# Get parameters

offsetx=$1

offsety=$2

lineori=$3

name=$4

# Crop, magnify, draw lines

if [ $lineori == 'horizontal' ]; then

convert ../$filename \

-crop 32x32+$offsetx+$offsety \

+repage \

-scale 512x512 \

-fill red \

-draw "line 0,128 512,128" \

-draw "line 0,256 512,256" \

-draw "line 0,384 512,384" \

$name.png

elif [ $lineori == 'vertical' ]; then

convert ../$filename \

-crop 32x32+$offsetx+$offsety \

+repage \

-scale 512x512 \

-fill red \

-draw "line 128,0 128,512" \

-draw "line 256,0 256,512" \

-draw "line 384,0 384,512" \

$name.png

fi

}

# This function crops the four sides to just the section that contains the break

# between the border and the image, and then enlarges this section so we can

# select the border with pixel level accuracy.

function zoom {

# What side?

side=$(wc -l cropparams | cut -d ' ' -f 1)

# Only run this function on the right and bottom sides (jpegtran can only crop

# the top and left sides at the JPEG MCU block level, usually 8 by 8 blocks).

if [ "$side" -gt 2 ]; then

# If right:

if [ $side -eq 3 ]; then

# Slice previously created border into strips.

convert right.png -crop 128x right_%d.png

# Read the cropparams file to learn which strip needs to be worked on.

strip=$((4-($(tail -1 cropparams)/8)))

# Draw lines on the strip.

convert right_$strip.png \

-fill red \

-draw "line 0,0 0,1024" \

-draw "line 127,0 127,1024" \

-fill "#808080" \

-draw "line 32,0,32,1024" \

-draw "line 64,0 64,1024" \

-draw "line 96,0 96,1024" \

-fill "#404040" \

-draw "line 16,0 16,1024" \

-draw "line 48,0 48,1024" \

-draw "line 80,0 80,1024" \

-draw "line 112,0 112,1024" \

tmp.png

# Display the strip using feh; catch the response (i.e. at which line the

# break between image and border is located) and write it to a file.

feh tmp.png --cycle-once \

--action1 "echo 8 >> cropparamsdetail" \

--action2 "echo 7 >> cropparamsdetail" \

--action3 "echo 6 >> cropparamsdetail" \

--action4 "echo 5 >> cropparamsdetail" \

--action5 "echo 4 >> cropparamsdetail" \

--action6 "echo 3 >> cropparamsdetail" \

--action7 "echo 2 >> cropparamsdetail" \

--action8 "echo 1 >> cropparamsdetail" \

--action9 "echo 0 >> cropparamsdetail"

# If bottom, idem:

elif [ $side -eq 4 ]; then

strip=$((3-(($(tail -1 cropparams)/8)-1)))

convert bottom.png -crop x128 bottom_%d.png

convert bottom_$strip.png \

-fill red \

-draw "line 0,0 1024,0" \

-draw "line 0,127 1024,127" \

-fill "#808080" \

-draw "line 0,32 1024,32" \

-draw "line 0,64 1024,64" \

-draw "line 0,96 1024,96" \

-fill "#404040" \

-draw "line 0,16 1024,16" \

-draw "line 0,48 1024,48" \

-draw "line 0,80 1024,80" \

-draw "line 0,112 1024,112" \

tmp.png

feh tmp.png --cycle-once \

--action1 "echo 8 >> cropparamsdetail" \

--action2 "echo 7 >> cropparamsdetail" \

--action3 "echo 6 >> cropparamsdetail" \

--action4 "echo 5 >> cropparamsdetail" \

--action5 "echo 4 >> cropparamsdetail" \

--action6 "echo 3 >> cropparamsdetail" \

--action7 "echo 2 >> cropparamsdetail" \

--action8 "echo 1 >> cropparamsdetail" \

--action9 "echo 0 >> cropparamsdetail"

fi

# If the side is the left or top side, skip the zoom crop procedure and write

# a 0.

else

echo 0 >> cropparamsdetail

fi

exit;

}

# If we already selected the 8 pixels wide strip in which the border is located,

# call the zoom function to specify precisely the height or width at which we

# will crop.

if [ "$1" == "zoom" ]; then

zoom $1

fi

# Check if filename was provided and file exists

if [ -z "$1" ]; then

echo "No filename provided"

exit

fi

if [ ! -f $1 ]; then

echo "File does not exist"

exit

fi

# Create directories

mkdir -p tmp

mkdir -p images_processed

# Get image width and height

filename=$1

width=$(mediainfo $1 | grep Width | tr -d ' ' | grep -o "[[:digit:]]*")

height=$(mediainfo $1 | grep Height | tr -d ' ' | grep -o "[[:digit:]]*")

# Move to temporary directory

cd tmp/

# For each of the corners of the images, extract the corner (see corner

# function) and write it to disk with vertical and once with horizontal lines

corner 0 $(($height-32)) vertical leftbottom

corner 0 $(($height-32)) horizontal bottomleft

corner 0 0 vertical lefttop

corner 0 0 horizontal topleft

corner $(($width-32)) 0 horizontal topright

corner $(($width-32)) 0 vertical righttop

corner $(($width-32)) $(($height-32)) vertical rightbottom

corner $(($width-32)) $(($height-32)) horizontal bottomright

# For each of the sides of the image, create an image containing the corners

# of the side.

montage lefttop.png leftbottom.png -tile 1x2 -geometry +0+0 left.png

montage topleft.png topright.png -geometry +0+0 top.png

montage righttop.png rightbottom.png -tile 1x2 -geometry +0+0 right.png

montage bottomleft.png bottomright.png -geometry +0+0 bottom.png

# For each of the side images just created, show the image and record the user

# input that tells in which strip the break is located.

feh left.png top.png right.png bottom.png --cycle-once \

--action1 'echo 8 >> cropparams && bash ../crop.sh zoom' \

--action2 'echo 16 >> cropparams && bash ../crop.sh zoom' \

--action3 'echo 24 >> cropparams && bash ../crop.sh zoom'

# Calculate the final cropping parameters (expressed as number of pixels from

# left/top/right/bottom).

paste -d \- cropparams cropparamsdetail | bc > cropparamsfinal

# Read the final cropping parameters into an array.

readarray -t cropparams < cropparamsfinal

# Remove the temporary directory

cd ..

rm -r tmp/

# Put each of the cropping parameters in a separate variable for convenience.

w=$(($width-${cropparams[0]}-${cropparams[2]}))

h=$(($height-${cropparams[1]}-${cropparams[3]}))

x=${cropparams[0]}

y=${cropparams[1]}

# Crop the image using jpegtran.

jpegtran -copy all -optimize -perfect -crop $w\x$h+$x+$y $filename > tmp.jpg

# Check if output file has the right dimensions

new_width=$(mediainfo tmp.jpg | grep Width | tr -d ' ' | grep -o "[[:digit:]]*")

new_height=$(mediainfo tmp.jpg | grep Height | tr -d ' ' | \

grep -o "[[:digit:]]*")

if [[ ! new_width -eq w ]]; then

echo "Width of output file is $new_width instead of $w"

exit

fi

if [[ ! new_height -eq h ]]; then

echo "Height of output file is $new_width instead of $w"

exit

fi

# Move the cropped image to images_processed and delete the original.

mv tmp.jpg images_processed/$filename

rm $filenameCropping them all

To crop all of the images, we simply loop over the images in the images_unprocessed/ directory, like this:

for path in images_unprocessed/*jpg; do

filename=$(echo $path | sed 's/images_unprocessed\///')

cp $path $filename

./crop.sh $filename

# If something went wrong during cropping, abort. Currently only checks

# whether the dimensions match the dimensions specified during cropping.

if [ -f error.token ]; then

echo "Aborting loop."

exit

fi

doneUploading

Once all the images have been cropped, pywikibot is used to upload them to Commons and to remove the {{Remove border}} template from page.

cd images_processed/

for f in *jpg; do

# Upload the image.

python ../../pywikibot/pwb.py upload -always -ignorewarn $f \

"removed borders (losslessly, using jpegtran)"

# Remove the \{\{Remove border\}\} template.

page=$(echo "File:$f")

python ../../pywikibot/pwb.py replace -always -nocase -regex \

-summary:"Removed \{\{remove border\}\} template" -page:$page \

"\{\{(crop|removeborder|remove frame|remove borders|remove border)\}\}" ""

done